Robot Detection

The detection of robots is of paramount importance during a match in the Standard Platform League (SPL). A dependable robot recognizer serves as the foundation for numerous other modules within the B-Human codebase, which rely on the representations provided by the robot detector.

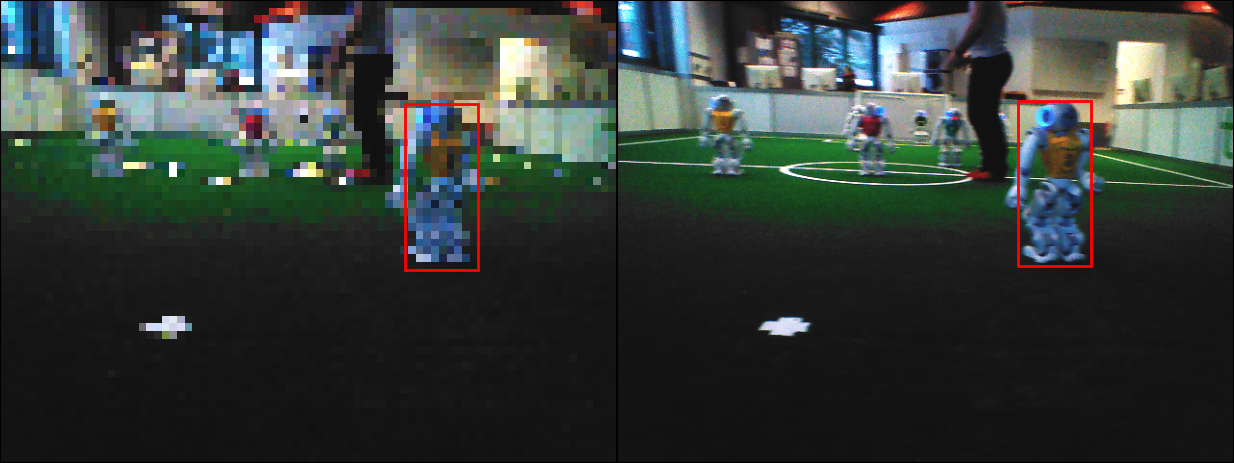

In 2023, we overhauled the previous B-Human robot detector developed by B. Poppinga [1] to address several shortcomings. Specifically, the prior detector left room for improvement in reliability and reproducibility, so we focused on these two areas. Reliability mattered because the previous detector suffered from occasional false positives and from cases where multiple robots were classified as a single one. Reproducibility was a concern because the dataset of the previous detector had not been persisted. As the training process of the previous detector was not reproducible and its dataset was not accessible to us, we had to create a new dataset and data pipeline.

A major goal of ours was also to implement robot detection on color images. The previous detector used greyscale images as inputs, but we expected the additional color information to improve accuracy.

Architecture

Our network architecture closely resembles that of the previous detection module, NaoYouSeeMe [1]. It is a YOLOv2-type network as described in the YOLO9000 paper [3]. Such networks are convolutional neural networks that predict bounding boxes for multiple objects of possibly differing categories. In NaoYouSeeMe, the classification is omitted, so only a single type of object (i.e. NAO robots) can be predicted, though still in multiple instances.

The network architecture uses multiple convolutional layers with ReLU activation interspersed with max-pooling layers, which is the typical structure of many image recognition networks. As the pooling layers reduce the image resolution, the number of image channels increases. This allows the network to preserve information while turning it from pixel values in the input image into a semantic representation (robot or not) in the output.

The bounding box prediction uses anchor boxes, a concept first introduced in Faster R-CNN [4]. The idea is to avoid having the network predict actual bounding box coordinates and instead predict only an offset to a fixed reference. To this end, a fixed set of predefined bounding box sizes - the anchor boxes - is used on each output pixel of the network. For each combination of output pixel and anchor box, there need to be five values in the output:

- a confidence/objectness value that decides whether the anchor box is used at all

- left/right and up/down positional offsets

- height and width scaling factors

- optionally, additional output values can be added to predict a class or other features of the detected object

NaoYouSeeMe incorporates this directly into the network, with a convolutional layer with sigmoid activation as the output layer.
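To make the five values per output pixel and anchor box concrete, the following minimal sketch decodes them into a bounding box. It assumes the YOLOv2 convention (sigmoid on confidence and position offsets, exponential on the size factors) and raw, pre-activation outputs; the exact decoding used in NaoYouSeeMe or the B-Human code may differ. The function name `decode_cell`, its parameters and the threshold are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(raw, cell_x, cell_y, anchor_w, anchor_h, threshold=0.5):
    """Decode the five raw outputs of one (output pixel, anchor box) pair.

    raw is (t_obj, t_x, t_y, t_w, t_h). Assumption: YOLOv2-style decoding.
    Returns (confidence, center_x, center_y, width, height) in units of
    output pixels, or None if the anchor box is not used.
    """
    t_obj, t_x, t_y, t_w, t_h = raw
    confidence = sigmoid(t_obj)
    if confidence < threshold:
        return None  # objectness too low: this anchor box predicts nothing
    # Positional offsets are relative to the top-left corner of the cell.
    center_x = cell_x + sigmoid(t_x)
    center_y = cell_y + sigmoid(t_y)
    # Scaling factors stretch or shrink the predefined anchor box size.
    width = anchor_w * math.exp(t_w)
    height = anchor_h * math.exp(t_h)
    return confidence, center_x, center_y, width, height
```

With all raw values at zero, the decoded box sits in the center of its cell and has exactly the size of the anchor box, which illustrates why the network only needs to learn small corrections to a fixed reference.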

The loss function used in training consists of three parts:

- a confidence/objectness loss

- a localization loss

- a classification loss, if object classes are predicted

The confidence loss only concerns whether an output cell contains an object. As described in the YOLO paper [2], most output cells do not contain an object to be predicted. Therefore, the loss for false positive detections needs to be weighted lower than the loss for false negatives. Otherwise, the result could be a network that refuses to predict any objects at all.

The localization loss measures how well the predicted bounding boxes align with the ground truth bounding boxes. It only penalizes the location error of a predictor if that predictor is responsible for a ground truth box. YOLOv1 uses the errors on the individual coordinates for this, with the squared error for the position and the squared error of the square roots for the size, so that a size deviation of a given magnitude is penalized more for small objects than for large ones [2]. NaoYouSeeMe instead uses the Intersection over Union between prediction and ground truth as a measure of locational accuracy. Intersection over Union means dividing the area where the predicted and the ground truth box overlap (Intersection) by the total area they cover (Union).

The classification loss is needed when the network also has to predict class labels. As with the localization loss, it only takes effect for output cells where an object is present in the ground truth.
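The Intersection over Union of two axis-aligned boxes can be computed as in the following sketch. The box format `(x_min, y_min, x_max, y_max)` and the function name `iou` are assumptions chosen for illustration; how the value is turned into a loss term is not specified here and is therefore left out.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    intersection = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    # The overlap is contained in both areas, so subtract it once.
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

The result is 1 for identical boxes and 0 for boxes that do not overlap, independent of the absolute box size.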

The final loss function is then a weighted sum of all three parts.
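Written out, the weighted sum has the following form. The symbols are notation introduced here for illustration, not taken from the B-Human code, and the concrete weight values are not given in this section.

\[ L = \lambda_{\text{conf}} \, L_{\text{conf}} + \lambda_{\text{loc}} \, L_{\text{loc}} + \lambda_{\text{cls}} \, L_{\text{cls}} \]

For a detector without class prediction, such as NaoYouSeeMe, the last term is dropped.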

Dataset

For our dataset, we used images from the SPL team Nao Devils [5], which is associated with TU Dortmund, as well as images extracted from the logs of our own test games and competitive matches. Images from the Nao Devils team were accessed via the open-source labeling platform ImageTagger [6].

Our complete dataset consists of 8333 images in total. Of these, 1959 were downloaded from ImageTagger. The images in the dataset are stored both on a publicly accessible web space and on an internal B-Human server, using the dataset-versioning software dvc. dvc integrates well into an existing git repository, providing a good way of persisting our dataset for future development. Additionally, storing our dataset on a public web space gave us great flexibility in choosing a labeling platform, as images could simply be accessed using a URL. The labeling platform we settled on was Label Studio [7], as it enables collaborative labeling and its features are state of the art at the time of writing.

Using Label Studio, we labeled a total of 18968 robots in our 8333 images. While labeling, we drew a bounding box around each robot and additionally marked each robot's jersey color. While we were not able to implement jersey color classification in our robot detector, we hope that this additional information will help future work on this topic.

Data pipeline

We implemented the whole data pipeline using TensorFlow Datasets. It includes the following steps:

- Loading images and associated labels

- Splitting into train and test sets

- Data augmentation 1 (mirroring and zooming)

- Image down-scaling

- Removal of unrecognizably small labels

- Data augmentation 2 (brightness, darkness)

- Image conversion (color space, normalization)

- Calculation of expected network output from the labels

We set some sub-datasets completely aside for evaluation purposes. Therefore, the separation of an evaluation dataset is currently not part of the data pipeline, but could easily be integrated.

For the network input, we scale images down by a factor of 8 in both dimensions, from \(640 \times 480\) to \(80 \times 60\) pixels. As a result, many robots that we labeled in the original images are unrecognizably small in the downscaled version. For the network to train properly, a bounding box has to cover a minimum size of 64 pixels; otherwise it is removed from the training dataset. However, some of our image augmentations affect the bounding box labels. The zoom augmentation in particular changes the size of objects in the image by selecting a random crop. We therefore split the image augmentation into two stages: the first applies the mirroring and zooming augmentations, which change bounding box labels, while the second leaves the labels unchanged. This not only allows us to interpose the bounding box filtering, but also to downscale the images to the input size of the network and cache the downscaled images in RAM. Caching at this stage lets us hold the entire dataset in memory without reloading anything from disk, while still allowing randomness in all subsequent augmentations.
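The effect of the first augmentation stage and the subsequent filtering on the labels can be sketched in plain Python as follows. This is an illustration only, not the actual TensorFlow pipeline: the function names, the box format `(x_min, y_min, x_max, y_max)` and the `min_area` parameter are hypothetical. In particular, the text does not state at which resolution the 64 pixel threshold is measured, so the threshold is left as a parameter.

```python
def mirror_box(box, image_width):
    """Mirror a box horizontally; left and right edges swap roles."""
    x_min, y_min, x_max, y_max = box
    return (image_width - x_max, y_min, image_width - x_min, y_max)


def scale_box(box, factor):
    """Scale all box coordinates, e.g. by 1/8 for 640x480 -> 80x60."""
    return tuple(value * factor for value in box)


def prepare_labels(boxes, image_width, mirror, factor, min_area):
    """Apply label-changing steps, then drop boxes that became too small.

    min_area is measured in pixels of the downscaled image (assumption).
    """
    if mirror:
        boxes = [mirror_box(box, image_width) for box in boxes]
    boxes = [scale_box(box, factor) for box in boxes]
    return [
        box for box in boxes
        if (box[2] - box[0]) * (box[3] - box[1]) >= min_area
    ]


# Example: one large and one tiny robot label in a 640x480 image.
labels = [(100, 100, 200, 300), (10, 10, 14, 14)]
# min_area=1.0 is a hypothetical value for this example.
kept = prepare_labels(labels, image_width=640, mirror=True, factor=0.125, min_area=1.0)
```

Because the filter runs after all label-changing steps, a robot that a zoom crop has enlarged can pass the filter even if it would have been removed at its original size, and vice versa.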

Training process

For each training run, we use a model config to set the training parameters, for example the use of color images, the image augmentation, or the batch size. This config file is then used to generate the network model and the dataset pipeline (see section Data pipeline). From our labeled dataset, we filter out all bounding boxes that are too small for reliable detection. After that, we use k-means clustering to obtain good anchor boxes for the training process. The actual training of the model is handled by Keras. For this, we implemented callbacks that adjust the training process: they check the F1-score in each epoch, save a checkpoint on each new maximum score, and stop the training if the score does not increase over several epochs. Additional callbacks handle live monitoring via TensorBoard. At the end of the training process, we evaluate the trained model on an evaluation dataset to check its performance under different metrics.
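As an illustration of the anchor box step, the sketch below clusters bounding box sizes with a plain k-means. It is a simplified stand-in and not the B-Human implementation: it uses Euclidean distance on (width, height) and a deterministic initialization for brevity, whereas the YOLO9000 paper [3] proposes an IoU-based distance. The function name `kmeans_anchors` and its parameters are hypothetical, and it expects `1 <= k <= len(sizes)`.

```python
def kmeans_anchors(sizes, k, iterations=50):
    """Cluster (width, height) pairs into k anchor box sizes.

    Returns the k cluster centers sorted by area.
    """
    # Deterministic start: evenly spaced samples from the area-sorted sizes.
    ordered = sorted(sizes, key=lambda s: s[0] * s[1])
    step = len(ordered) / k
    centroids = [ordered[int(i * step + step / 2)] for i in range(k)]

    for _ in range(iterations):
        # Assignment step: each box goes to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for w, h in sizes:
            nearest = min(
                range(k),
                key=lambda i: (w - centroids[i][0]) ** 2 + (h - centroids[i][1]) ** 2,
            )
            clusters[nearest].append((w, h))

        # Update step: move each centroid to the mean of its members.
        updated = []
        for centroid, members in zip(centroids, clusters):
            if members:
                mean_w = sum(m[0] for m in members) / len(members)
                mean_h = sum(m[1] for m in members) / len(members)
                updated.append((mean_w, mean_h))
            else:
                updated.append(centroid)  # keep an empty cluster in place

        if updated == centroids:
            break  # converged
        centroids = updated

    return sorted(centroids, key=lambda c: c[0] * c[1])
```

Running the clustering only on the boxes that survive the size filter, as described above, keeps very small labels from pulling an anchor box towards sizes the network never sees during training.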

1. B. Poppinga: "Nao You See Me: Echtzeitfähige Objektdetektion für mobile Roboter mittels Deep Learning", Master's thesis, Universität Bremen, 2018. [Online]. Available: https://b-human.de/downloads/theses/master-thesis-bpoppinga.pdf

2. J. Redmon, S. Divvala, R. Girshick and A. Farhadi: "You Only Look Once: Unified, Real-Time Object Detection", Las Vegas, NV, USA, pp. 779–788, June 2016. [Online]. Available: http://ieeexplore.ieee.org/document/7780460/

3. J. Redmon and A. Farhadi: "YOLO9000: Better, Faster, Stronger", Honolulu, HI, pp. 6517–6525, July 2017. [Online]. Available: http://ieeexplore.ieee.org/document/8100173/

4. S. Ren, K. He, R. Girshick and J. Sun: "Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks", pp. 1137–1149, June 2017. [Online]. Available: http://ieeexplore.ieee.org/document/7485869/

5. https://naodevils.de/

6. N. Fiedler, M. Bestmann and N. Hendrich: "ImageTagger: An Open Source Online Platform for Collaborative Image Labeling", Aug. 2019, pp. 162–169.

7. https://labelstud.io/