Architecture

The B-Human architecture [1] is based on the framework of the GermanTeam 2007 [2], adapted to the NAO. This chapter summarizes the major features of the architecture: binding, threads, modules and representations, communication, and debugging support.

Binding

On the NAO, the B-Human software consists of a single binary file and a number of configuration files, all located in /home/nao/Config. When the program is started, it first contacts LoLA to retrieve the serial number of the body, which is then mapped to a body name using the file Config/Robots/robots.cfg. The Unix hostname (which is set during the flash procedure by looking up the head serial number in Config/Robots/robots.cfg) is used as the robot's head name. If that file contains no entry for a given serial number (entries are normally created by the createRobot script, so this should only happen in special scenarios), the name "Default" is assumed for the respective body part. Then, the connection to LoLA is closed again and the program forks itself to start the main robot control program. After the main program has terminated, another connection to LoLA is established to make the robot sit down if necessary, switch off all joints, and display the exit status of the main program in the eyes (blue: terminated normally, red: crashed).
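The name lookup and the exit status display described above can be sketched as follows. This is only an illustration under stated assumptions, not the B-Human implementation: `RobotTable`, `resolveName`, `EyeColor`, and `exitStatusColor` are hypothetical names, and the table is an in-memory stand-in for the contents of Config/Robots/robots.cfg, whose actual file format is not shown here.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical in-memory stand-in for the serial number entries of
// Config/Robots/robots.cfg: serial number -> name.
using RobotTable = std::map<std::string, std::string>;

// Returns the name registered for a serial number,
// or "Default" if the table has no entry for it.
std::string resolveName(const RobotTable& table, const std::string& serialNumber)
{
  // Assumption of this sketch: a serial number is never empty.
  assert(!serialNumber.empty());
  const auto it = table.find(serialNumber);
  return it == table.end() ? std::string("Default") : it->second;
}

enum class EyeColor { blue, red };

// Maps the way the main program ended to the eye color described in the text.
EyeColor exitStatusColor(bool terminatedNormally)
{
  return terminatedNormally ? EyeColor::blue : EyeColor::red;
}
```

With a table that contains one made-up serial number, `resolveName` returns the registered name for that number and "Default" for any other.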

In the main robot control program, the module NaoProvider exchanges data with LoLA following the protocol defined by Aldebaran for RoboCup teams. For efficiency, only the first packet received is actually parsed: this determines the addresses of all relevant data in that packet, and these addresses are then reused for later packets instead of parsing them again. Similarly, a template for packets sent to LoLA is created only once, and the actual data is patched into it in each frame. The NaoProvider uses blocking communication, i.e. the whole thread Motion (cf. the section on threads) waits until a packet from LoLA is received before it continues.
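The idea of parsing only the first packet and reusing the recorded addresses can be illustrated with a small sketch. The packet layout used here is a deliberately simplified, hypothetical one (it is not the LoLA protocol), and `OffsetCache`, `appendField`, and the field names in the usage note are assumptions made for illustration. The sketch also assumes that every packet has the same layout as the first one.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <map>
#include <string>
#include <vector>

// Hypothetical layout (NOT the real LoLA format): a sequence of fields,
// each encoded as [1-byte key length][key bytes][4-byte float value].
using Packet = std::vector<std::uint8_t>;

class OffsetCache
{
public:
  // Reads a value. Only the first packet seen is parsed; later packets
  // are accessed directly at the offsets recorded during that parse.
  float read(const Packet& packet, const std::string& key)
  {
    float value;
    std::memcpy(&value, packet.data() + offsetOf(packet, key), sizeof(float));
    return value;
  }

  // Overwrites a value in place, as when patching data into a send template.
  void patch(Packet& packet, const std::string& key, float value)
  {
    std::memcpy(packet.data() + offsetOf(packet, key), &value, sizeof(float));
  }

private:
  std::size_t offsetOf(const Packet& packet, const std::string& key)
  {
    if(!parsed)
      parse(packet);
    const std::size_t offset = offsets.at(key); // throws for unknown keys
    assert(offset + sizeof(float) <= packet.size());
    return offset;
  }

  void parse(const Packet& packet)
  {
    std::size_t pos = 0;
    while(pos < packet.size())
    {
      const std::size_t keyLength = packet[pos++];
      assert(pos + keyLength + sizeof(float) <= packet.size());
      const std::string key(reinterpret_cast<const char*>(packet.data() + pos), keyLength);
      pos += keyLength;
      offsets[key] = pos; // remember where the value starts
      pos += sizeof(float);
    }
    parsed = true;
  }

  bool parsed = false;
  std::map<std::string, std::size_t> offsets;
};

// Appends one field to a packet; used to build example packets and templates.
void appendField(Packet& packet, const std::string& key, float value)
{
  assert(key.size() <= 255);
  packet.push_back(static_cast<std::uint8_t>(key.size()));
  packet.insert(packet.end(), key.begin(), key.end());
  const auto* bytes = reinterpret_cast<const std::uint8_t*>(&value);
  packet.insert(packet.end(), bytes, bytes + sizeof(float));
}
```

For example, after building two packets with the made-up fields `gyroX` and `accZ`, the first call to `read` parses the first packet, and all subsequent `read` and `patch` calls on either packet only perform a map lookup and a `memcpy`.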

Threads

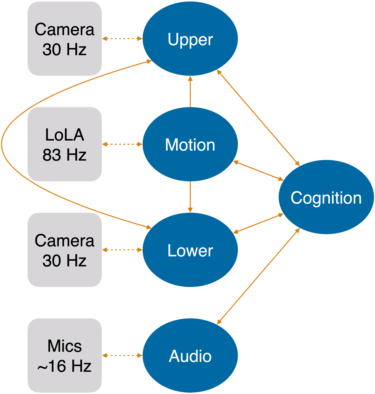

Most robot control programs use concurrent processes and threads. Of those, we only use threads in order to have a single shared memory context. The number of parallel threads is best dictated by external requirements coming from the robot itself or its operating system. The NAO provides images from each camera at a frequency of 30 Hz and accepts new joint angle commands at 83 Hz. For handling the camera images, there are two options: either two threads, each of which processes the images of one of the two cameras, plus a third one that collects the results of the image processing and executes world modeling and behavior control, or a single thread that alternately processes the images of both cameras and also performs all further steps. We currently use the first approach in order to better exploit the multi-core hardware of the robot. It makes it possible to process both images in parallel and leaves significantly more computation time per image. The two image processing threads run at 30 Hz and handle one camera image each. They both trigger the third thread, which processes the results as described above and thus runs at 60 Hz in parallel to the image processing. In addition, there is a thread that runs at the motion frame rate of the NAO, i.e. at 83 Hz, and one that records data from the NAO's microphones and processes it. Another thread performs the TCP communication with a host PC for the purpose of debugging.
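The triggering scheme, in which two image processing threads each wake a third thread once per image, can be sketched with standard C++ threads. This is a minimal illustration, not the B-Human framework: `PerceptionResult` and `runPipeline` are hypothetical names, the image processing itself is omitted, and a simple mutex-protected queue stands in for whatever mechanism the real system uses to pass data between threads.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <vector>

// Hypothetical result of processing one camera image.
struct PerceptionResult
{
  std::string camera; // "Upper" or "Lower"
  int frame;
};

// Runs two perception threads that each produce framesPerCamera results and
// a third thread that is triggered once per result. Returns the results in
// the order in which the third thread consumed them.
std::vector<PerceptionResult> runPipeline(int framesPerCamera)
{
  assert(framesPerCamera >= 0);
  std::mutex mutex;
  std::condition_variable trigger;
  std::queue<PerceptionResult> pending;
  std::vector<PerceptionResult> processed;

  auto perception = [&](const std::string& camera)
  {
    for(int frame = 0; frame < framesPerCamera; ++frame)
    {
      // The image processing for this camera would happen here.
      {
        std::lock_guard<std::mutex> lock(mutex);
        pending.push({camera, frame});
      }
      trigger.notify_one(); // wake the consuming thread
    }
  };

  auto cognition = [&]
  {
    const std::size_t expected = 2 * static_cast<std::size_t>(framesPerCamera);
    while(processed.size() < expected)
    {
      std::unique_lock<std::mutex> lock(mutex);
      trigger.wait(lock, [&] { return !pending.empty(); });
      // World modeling and behavior control would use the result here.
      processed.push_back(pending.front());
      pending.pop();
    }
  };

  std::thread cognitionThread(cognition);
  std::thread upper(perception, "Upper");
  std::thread lower(perception, "Lower");
  upper.join();
  lower.join();
  cognitionThread.join();
  return processed;
}
```

Because the consuming thread runs once per result from either producer, it executes twice as often as each producer, which mirrors the 30 Hz and 60 Hz relationship described above. The interleaving of upper and lower results depends on scheduling and is not fixed.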

This results in the six threads Upper, Lower, Cognition, Motion, Audio, and Debug used in the B-Human system. The perception threads Upper and Lower receive camera images from Video for Linux. In addition, they receive data about the world model from the thread Cognition as well as sensor data from the thread Motion. They process the images and send the detection results to the thread Cognition. Cognition uses this information together with sensor data from the thread Motion for world modeling and behavior control and sends high-level motion commands to the thread Motion. Motion executes these commands by generating the target angles for the 25 joints of the NAO. It sends these target angles through the NaoProvider to the NAO's interface LoLA, and it receives sensor readings such as the actual joint angles, body acceleration, and gyro measurements. In addition, Motion reports on the motion of the robot, e.g. by providing the results of dead reckoning. The thread Audio performs the whistle detection if the current game state requires it and reports the results back to Cognition. The thread Debug communicates with the host PC. It distributes the data received from the host PC to the other threads, collects the data they provide, and forwards it back to the host machine. It is inactive during actual games.
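To make the timing constraints concrete, the following C++17 sketch lists the six threads with the rates stated in the text and derives the time available per cycle. `ThreadSpec`, `threadSpecs`, and `cycleTimeMs` are hypothetical names used only for this illustration. The text gives no fixed rate for Audio and Debug, so their frequency is left empty rather than guessed.

```cpp
#include <array>
#include <cassert>
#include <optional>
#include <string_view>

// One entry per thread; the frequency is empty where the text states none.
struct ThreadSpec
{
  std::string_view name;
  std::optional<double> frequencyHz;
};

constexpr std::array<ThreadSpec, 6> threadSpecs{{
  {"Upper", 30.0},     // one image of the upper camera per cycle
  {"Lower", 30.0},     // one image of the lower camera per cycle
  {"Cognition", 60.0}, // triggered by both perception threads
  {"Motion", 83.0},    // motion frame rate of the NAO
  {"Audio", std::nullopt},
  {"Debug", std::nullopt},
}};

// Time available per cycle in milliseconds, if the thread has a stated rate.
constexpr std::optional<double> cycleTimeMs(const ThreadSpec& spec)
{
  if(!spec.frequencyHz)
    return std::nullopt;
  return 1000.0 / *spec.frequencyHz;
}
```

The resulting budgets are about 33.3 ms per image for Upper and Lower, about 16.7 ms per cycle for Cognition, and about 12 ms per cycle for Motion.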

1. Thomas Röfer and Tim Laue. On B-Human's code releases in the Standard Platform League – software architecture and impact. In Sven Behnke, Manuela Veloso, Arnoud Visser, and Rong Xiong, editors, RoboCup 2013: Robot World Cup XVII, volume 8371 of Lecture Notes in Artificial Intelligence, pages 648–656. Springer, 2014.

2. Thomas Röfer, Jörg Brose, Daniel Göhring, Matthias Jüngel, Tim Laue, and Max Risler. GermanTeam 2007. In Ubbo Visser, Fernando Ribeiro, Takeshi Ohashi, and Frank Dellaert, editors, RoboCup 2007: Robot Soccer World Cup XI Preproceedings, Atlanta, GA, USA, 2007. RoboCup Federation.